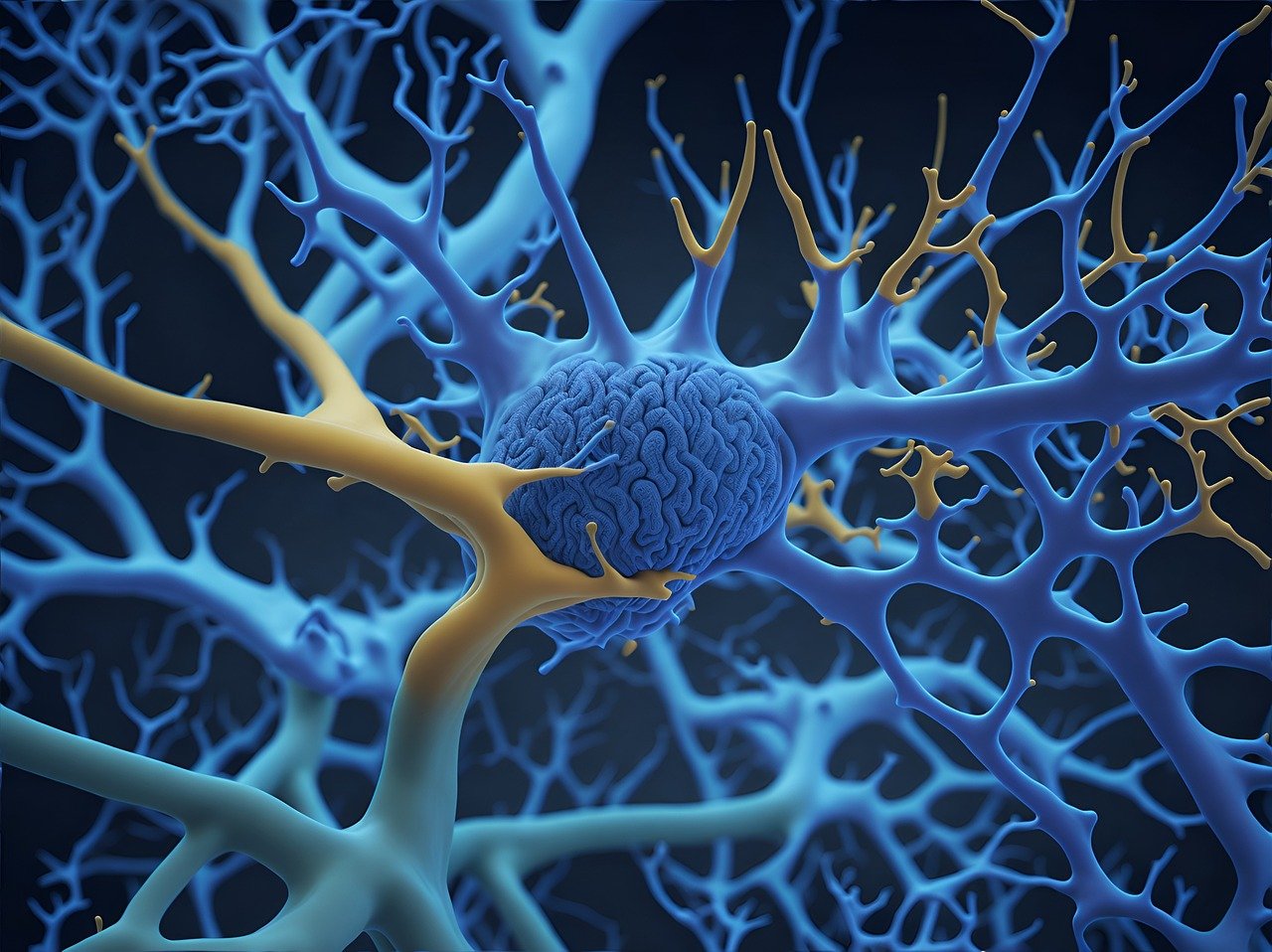

Neuromorphic computing is a cutting-edge field in computer science and artificial intelligence (AI) that seeks to mimic the structure and functioning of the human brain using advanced hardware and software systems. The term “neuromorphic” comes from “neuro,” referring to the brain or nervous system, and “morphic,” meaning shape or form. In essence, neuromorphic computing aims to design computers that function more like biological brains, using brain-inspired models for information processing.

While traditional computers operate based on binary logic (ones and zeros) and are built using the von Neumann architecture—a system that separates memory from computation—neuromorphic systems aim to replicate how neurons and synapses in the brain work together to process information in a highly efficient, parallel, and power-saving manner. These systems offer the potential to revolutionize areas like artificial intelligence, robotics, and machine learning by providing more energy-efficient, adaptive, and scalable computing platforms.

This article will explore the key principles behind neuromorphic computing, how it works, its architecture, and its potential applications, as well as the challenges faced in this emerging field.

Understanding Neuromorphic Computing

Neuromorphic computing is inspired by the brain’s ability to process vast amounts of information in real time while using very little power. The human brain, with roughly 86 billion neurons connected by trillions of synapses, runs on about 20 watts. On that budget it processes complex sensory inputs, learns from experience, adapts to new situations, and makes decisions far more energy-efficiently than traditional computers performing comparable tasks.

Key Concepts of Neuromorphic Computing:

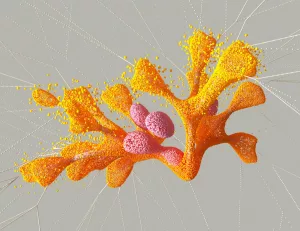

- Neurons and Synapses: Neurons are the basic processing units in the brain, and synapses are the connections between neurons that facilitate communication. Neuromorphic systems use artificial neurons and synapses to simulate how biological neurons send and receive electrical signals.

- Parallel Processing: Unlike traditional computers, which typically process tasks sequentially, the brain processes information in parallel. Neuromorphic systems are designed to mimic this by running multiple processes at the same time, enabling faster computation.

- Event-Driven Processing: Neuromorphic systems do not process information continuously like traditional computers. Instead, they use event-driven processing, where information is only processed when there is a change or event, much like how the brain reacts to stimuli. This approach significantly reduces power consumption.

- Spiking Neural Networks (SNNs): In neuromorphic computing, spiking neural networks are used to mimic the brain’s communication style. Instead of continuous signals, neurons in the brain communicate via electrical spikes, which are short bursts of voltage. Neuromorphic systems replicate this using SNNs, where artificial neurons “fire” spikes of information when they reach a certain threshold.

- Plasticity and Learning: One of the key features of the brain is synaptic plasticity, which allows it to adapt and learn over time. Neuromorphic systems incorporate learning mechanisms, such as Hebbian learning (where synaptic connections are strengthened between neurons that fire together), to mimic the brain’s ability to improve and adapt through experience.
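To make the threshold-and-fire idea concrete, here is a minimal Python sketch of a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking neuron models. The class name, the parameter values (`threshold`, `leak`), and the discrete time step are illustrative assumptions, not taken from any particular chip or framework.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron with illustrative parameters."""
    threshold: float = 1.0   # potential at which the neuron fires
    leak: float = 0.9        # fraction of potential kept each time step
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Old potential decays (leaks), then new input is integrated.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Return the time steps at which the neuron fired."""
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


if __name__ == "__main__":
    print(run(LIFNeuron(), [0.4] * 10))   # [2, 5, 8]
    print(run(LIFNeuron(), [0.05] * 10))  # []
```

With a constant input of 0.4, the potential needs three steps to cross the threshold, so the neuron fires at steps 2, 5, and 8. With a weak input of 0.05, the leak balances the input and the neuron never fires, which is the behavior that lets spiking systems stay silent when little is happening.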

Neuromorphic computing promises to overcome some of the limitations of traditional computing systems, particularly in the areas of power efficiency and the ability to process complex sensory information in real-time.

How Neuromorphic Computing Works

Neuromorphic computing works by creating hardware systems that imitate the architecture and function of the brain’s neural networks. This involves building neuromorphic chips that are fundamentally different from traditional central processing units (CPUs) and graphics processing units (GPUs).

Neuromorphic Hardware

Neuromorphic systems are typically built using specialized hardware designed to mimic the parallel architecture of the brain. Some of the most notable neuromorphic hardware projects include:

- IBM’s TrueNorth: One of the first large-scale neuromorphic chips, TrueNorth contains over a million artificial neurons and 256 million synapses. It processes information in parallel and is designed to be highly energy-efficient, mimicking the event-driven processing of the brain.

- Intel’s Loihi: Intel’s neuromorphic chip, Loihi, is another key player in the field. Loihi features spiking neural networks that can learn and adapt in real-time, much like biological neural networks. The chip incorporates plasticity rules that allow it to strengthen or weaken synaptic connections based on the patterns it detects, enabling it to improve its performance over time.

- SpiNNaker: Developed by the University of Manchester, SpiNNaker (Spiking Neural Network Architecture) is a neuromorphic computing platform that uses a network of many small, interconnected processors to simulate millions of neurons in real-time. SpiNNaker is particularly useful for simulating large-scale brain models.

Architecture of Neuromorphic Systems

Traditional computing architectures are based on the von Neumann model, which separates memory and processing. In contrast, neuromorphic systems are designed to mimic the brain’s architecture, where memory and processing are closely integrated. This is achieved by creating neuromorphic processors that consist of artificial neurons and synapses capable of both storing information and performing computation simultaneously.

Key elements of neuromorphic architectures include:

- Spiking Neurons: Neuromorphic chips use artificial neurons that communicate via spikes, similar to how biological neurons send electrical signals. When an artificial neuron reaches a certain activation threshold, it fires a spike, which is then transmitted to other neurons through artificial synapses.

- Synaptic Connectivity: In neuromorphic systems, artificial synapses are used to connect neurons. These synapses can adjust their strength based on the activity of the connected neurons, enabling the system to learn from data in a way that mimics synaptic plasticity in the brain.

- Event-Driven Operation: Neuromorphic systems operate in an event-driven manner, meaning that neurons only fire when they receive sufficient input to reach a threshold. This makes the system highly energy-efficient because work is done only when spikes arrive, unlike conventional clocked processors, which consume power on every clock cycle whether or not there is new data to process.

- Learning and Adaptation: Many neuromorphic chips are designed to support real-time learning and adaptation. This is achieved through mechanisms like spike-timing-dependent plasticity (STDP), which adjusts the strength of synapses based on the timing of spikes between neurons. This allows neuromorphic systems to learn from patterns in the data, much like the human brain learns from experience.
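The combination of spiking neurons, synaptic connectivity, and event-driven operation described above can be sketched as a small event-queue simulation. This is a simplified illustration, not how any specific chip is implemented: the network in `SYNAPSES`, the `THRESHOLD` value, and the `simulate` function are all hypothetical, and leak is left out to keep the example short.

```python
import heapq
from collections import defaultdict

# Hypothetical toy network: pre-neuron -> list of (post-neuron, weight, delay)
SYNAPSES = {
    "A": [("C", 0.6, 1.0)],
    "B": [("C", 0.6, 1.0)],
    "C": [("D", 1.2, 2.0)],
}
THRESHOLD = 1.0


def simulate(input_spikes):
    """Run the network on externally injected (time, neuron) spikes.

    Work happens only when a spike arrives at a synapse; neurons that
    receive no input are never touched. Leak is omitted for brevity.
    """
    queue = []                      # min-heap of (arrival_time, target, weight)
    potential = defaultdict(float)  # membrane potential per neuron
    fired = []                      # (time, neuron) for every spike

    def emit(time, neuron):
        """Record a spike and schedule its arrival at each target."""
        fired.append((time, neuron))
        for post, weight, delay in SYNAPSES.get(neuron, []):
            heapq.heappush(queue, (time + delay, post, weight))

    for time, neuron in input_spikes:
        emit(time, neuron)

    while queue:
        time, neuron, weight = heapq.heappop(queue)
        potential[neuron] += weight          # integrate the incoming spike
        if potential[neuron] >= THRESHOLD:   # threshold reached: fire
            potential[neuron] = 0.0
            emit(time, neuron)

    return sorted(fired)


if __name__ == "__main__":
    print(simulate([(0.0, "A"), (0.5, "B")]))
    print(simulate([(0.0, "A")]))
```

When both A and B spike, their combined input pushes C over the threshold and the activity propagates on to D. When only A spikes, C stays below threshold and nothing further is computed.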

Neuromorphic Software and Algorithms

While hardware is a critical component of neuromorphic computing, software and algorithms are equally important for implementing brain-like processing. Neuromorphic computing uses specialized algorithms designed to work with spiking neural networks (SNNs) and other brain-inspired models.

Spiking Neural Networks (SNNs)

Unlike traditional artificial neural networks (ANNs) used in deep learning, spiking neural networks (SNNs) are designed to more closely resemble the behavior of biological neurons. In SNNs, neurons communicate by sending discrete electrical spikes, and the timing of these spikes is crucial for information processing.

In SNNs, the timing of spikes itself carries information, an approach known as temporal coding. The strength of synaptic connections can also change based on spike timing, which allows the network to learn from temporal patterns in data (see spike-timing-dependent plasticity below). SNNs are particularly well-suited for tasks that require real-time processing, such as robotics, sensory processing, and dynamic pattern recognition.
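One simple way to see how spike timing can carry information is latency coding, where a stronger input produces an earlier spike. The sketch below is only one of several temporal coding schemes, and the function names, the linear mapping, and the `t_max` window are assumptions chosen for illustration.

```python
def latency_encode(intensities, t_max=10.0):
    """Map each intensity in [0, 1] to one spike time: stronger -> earlier.

    An intensity of zero produces no spike at all (None).
    """
    spike_times = []
    for x in intensities:
        if not 0.0 <= x <= 1.0:
            raise ValueError("intensity must be in [0, 1]")
        spike_times.append(None if x == 0.0 else t_max * (1.0 - x))
    return spike_times


def first_to_spike(spike_times):
    """Return the index of the earliest spike, or None if nothing spiked."""
    candidates = [(t, i) for i, t in enumerate(spike_times) if t is not None]
    return min(candidates)[1] if candidates else None


if __name__ == "__main__":
    times = latency_encode([1.0, 0.5, 0.0])
    print(times)                  # [0.0, 5.0, None]
    print(first_to_spike(times))  # 0
```

A downstream neuron can act on the first spike it receives without waiting for the rest, which is part of why timing-based codes suit low-latency tasks.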

Learning Mechanisms in Neuromorphic Systems

Neuromorphic systems use various learning mechanisms to adapt to new data and improve performance over time. Some of the most common learning mechanisms include:

- Hebbian Learning: Often summarized as “cells that fire together, wire together,” Hebbian learning strengthens the connections between neurons that are activated simultaneously. This principle is used in neuromorphic systems to reinforce patterns that occur frequently in the data.

- Spike-Timing-Dependent Plasticity (STDP): STDP is a biologically inspired learning rule that adjusts synaptic weights based on the precise timing of spikes between neurons. If one neuron consistently fires just before another, the connection between them is strengthened. If the timing is reversed, the connection weakens.

- Reinforcement Learning: Some neuromorphic systems incorporate reinforcement learning algorithms, where the system receives feedback from its environment and adjusts its behavior to maximize a reward. This is similar to how animals learn from positive and negative outcomes.
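The first two rules above can be written as short weight-update functions. This is a minimal sketch using a common textbook form of each rule: the learning rate `lr`, the amplitudes `a_plus` and `a_minus`, the time constant `tau`, and the weight bounds are illustrative assumptions rather than values from any specific neuromorphic system.

```python
import math


def hebbian_update(weight, pre, post, lr=0.1):
    """Hebbian rule: the weight grows with the product of both activities."""
    return weight + lr * pre * post


def stdp_update(weight, t_pre, t_post,
                a_plus=0.05, a_minus=0.055, tau=20.0,
                w_min=0.0, w_max=1.0):
    """Pair-based STDP with an exponential timing window.

    Pre fires before post (dt > 0): strengthen the synapse.
    Post fires before pre (dt < 0): weaken the synapse.
    The closer the two spikes are in time, the larger the change.
    """
    dt = t_post - t_pre
    if dt > 0:
        weight += a_plus * math.exp(-dt / tau)
    elif dt < 0:
        weight -= a_minus * math.exp(dt / tau)
    return min(w_max, max(w_min, weight))  # keep the weight in bounds


if __name__ == "__main__":
    print(hebbian_update(0.2, pre=1.0, post=1.0))    # both active: grows
    print(stdp_update(0.5, t_pre=10.0, t_post=15.0))  # pre first: grows
    print(stdp_update(0.5, t_pre=15.0, t_post=10.0))  # post first: shrinks
```

Both rules use only information available at the synapse itself (the activity or spike times of the two neurons it connects), which is what makes them attractive for on-chip learning.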

Applications of Neuromorphic Computing

Neuromorphic computing has the potential to transform a wide range of industries by providing more efficient, adaptive, and scalable computing solutions. Some of the most promising applications include:

1. Artificial Intelligence and Machine Learning

Neuromorphic computing is expected to play a major role in advancing AI and machine learning. Neuromorphic systems can process information in real-time, adapt to new data, and learn from experience, making them ideal for tasks such as:

- Real-time pattern recognition

- Speech and image recognition

- Autonomous decision-making

- Natural language processing

2. Robotics

Neuromorphic computing has significant potential in robotics, particularly in the development of autonomous robots that can learn from their environment and make decisions on the fly. Neuromorphic processors can enable robots to process sensory information in real-time, navigate complex environments, and interact with humans more naturally.

3. Healthcare and Neuroscience

Neuromorphic systems can be used to simulate the brain and study neurological disorders such as Alzheimer’s disease, Parkinson’s disease, and epilepsy. By creating large-scale models of the brain, researchers can gain insights into how neural circuits function and develop new treatments for neurological conditions.

4. Energy-Efficient Computing

One of the most significant advantages of neuromorphic computing is its energy efficiency. Traditional computers consume large amounts of power, particularly for tasks like deep learning and AI. Neuromorphic systems, by contrast, use far less power by processing information in parallel and only when events occur. This makes them ideal for energy-constrained environments such as mobile devices, wearable technology, and Internet of Things (IoT) devices.
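A rough way to see where the savings come from is to count operations. The sketch below compares a dense layer that is evaluated at every time step with an event-driven layer that only does work when an input spikes. The layer sizes and spike counts are made-up numbers, and operation counts are only a loose proxy for energy; real savings depend heavily on the hardware.

```python
def dense_ops(n_in, n_out, steps):
    """Multiply-accumulates for a dense layer evaluated at every time step."""
    return n_in * n_out * steps


def event_driven_ops(spike_counts, fan_out):
    """Synaptic updates when work is done only for inputs that spiked.

    spike_counts[i] is the number of spikes from input i over the window;
    each spike triggers one update per outgoing connection.
    """
    return sum(spike_counts) * fan_out


if __name__ == "__main__":
    n_in, n_out, steps = 1000, 100, 100
    dense = dense_ops(n_in, n_out, steps)
    # Assumption: each input spikes only twice during the 100-step window.
    sparse = event_driven_ops([2] * n_in, fan_out=n_out)
    print(dense, sparse, dense // sparse)  # 10000000 200000 50
```

In this made-up scenario the event-driven layer performs 50 times fewer operations. The advantage shrinks as activity becomes denser, which is why sparse, bursty workloads are the natural fit.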

5. Edge Computing

Neuromorphic computing is well-suited for edge computing, where data is processed close to the source of data generation (e.g., sensors or devices) rather than in a centralized cloud server. Neuromorphic systems’ ability to process data locally, in real-time, with minimal power consumption makes them ideal for applications such as smart cities, autonomous vehicles, and industrial automation.
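At the edge, the event-driven idea often starts at the sensor: instead of transmitting every sample, a device reports only meaningful changes. The following sketch shows a simple send-on-delta scheme; the function name, the threshold, and the sample temperature readings are hypothetical.

```python
def delta_events(samples, threshold):
    """Return (index, value) pairs only when the signal has changed enough.

    A new event is emitted when the value differs from the last emitted
    value by at least `threshold`. The first sample is always emitted.
    """
    events = []
    last = None
    for i, value in enumerate(samples):
        if last is None or abs(value - last) >= threshold:
            events.append((i, value))
            last = value
    return events


if __name__ == "__main__":
    readings = [20.0, 20.1, 20.1, 20.2, 21.0, 21.0, 21.1, 19.5]
    print(delta_events(readings, threshold=0.5))
    # [(0, 20.0), (4, 21.0), (7, 19.5)]
```

Eight readings collapse to three events here, so downstream processing and radio transmission happen only when the environment actually changes.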

Challenges and Future Directions

Despite its promise, neuromorphic computing faces several challenges that must be addressed to realize its full potential:

- Complexity of Brain Emulation: The human brain is highly complex, and replicating its functionality in hardware and software remains a significant challenge. Current neuromorphic systems can simulate networks of up to millions of neurons, but that is still far below the scale of the human brain, and closing the gap will require further advances in hardware and algorithms.

- Standardization: Neuromorphic computing is still an emerging field, and it lacks standard hardware architectures, programming models, and benchmarks. Establishing industry-wide standards will be critical for advancing the field and enabling broader adoption.

- Programming Complexity: Developing algorithms for neuromorphic systems is more complex than for traditional computers. Researchers are still working on creating user-friendly programming frameworks that can make neuromorphic computing more accessible to developers and engineers.

- Hardware Limitations: While neuromorphic chips such as IBM’s TrueNorth and Intel’s Loihi have made significant progress, there are still limitations in terms of processing power, scalability, and efficiency. Future research will focus on overcoming these hardware limitations to create more powerful and flexible neuromorphic systems.

Conclusion

Neuromorphic computing represents a bold and innovative approach to building computing systems that mimic the architecture and functioning of the human brain. By incorporating principles such as parallel processing, event-driven computation, and spiking neural networks, neuromorphic systems offer the potential to revolutionize fields such as artificial intelligence, robotics, healthcare, and energy-efficient computing.

Although neuromorphic computing is still in its early stages, its promise is clear: it offers a computing paradigm that could be more efficient and adaptive than conventional architectures for processing complex, real-world data. As researchers continue to develop more advanced neuromorphic chips and algorithms, this field will likely play a pivotal role in shaping the future of technology.