Artificial Intelligence (AI) is becoming an integral part of our daily lives, with applications in industries such as healthcare, finance, and transportation. However, as these systems grow more complex, it becomes harder to understand how they reach their decisions. Explainable AI (XAI) is an emerging field that aims to make AI models more transparent and interpretable, so that humans can better understand and trust their decisions.

One of the main challenges in AI is that many machine learning models are “black boxes.” These models can make accurate predictions, but it is hard to see how they arrived at them. This becomes a problem for important decisions, such as whether to approve a loan application or how to diagnose a medical condition. Such decisions can significantly affect people’s lives, so it is essential to understand the reasoning behind them.

XAI addresses this issue by developing techniques and approaches that make machine learning models more interpretable and transparent. Several methods can be used to achieve this, including:

Model Interpretation

Model interpretation involves developing techniques that reveal the internal workings of a machine learning model, such as how it uses different features of the data to make predictions. This can be done through methods such as:

- Feature Importance Analysis: This technique helps to identify which features in the dataset are most influential in the model’s decision-making process. For example, in a credit scoring model, feature importance analysis might show that income level and credit history are the most critical factors.

- Partial Dependence Plots: These plots show how the model’s average prediction changes as a selected feature varies. Each point is computed by fixing that feature at one value and averaging the model’s predictions over the observed values of all other features. This gives a visual understanding of how changes in a particular feature affect predictions.

- Decision Trees: A decision tree provides a straightforward interpretation through a flowchart-like structure in which each internal node tests a feature and each leaf holds a prediction. This makes it easier to trace the decision path for a given prediction.

These methods allow us to gain insight into how the model is making decisions and identify any potential biases or errors in the data.
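Feature importance can be computed without looking inside the model at all. Permutation importance shuffles one feature’s column, breaking its link to the outcome, and measures how much accuracy drops. The sketch below is a minimal illustration under assumptions: `toy_model` is a hypothetical credit rule, and the data are synthetic. Libraries such as scikit-learn provide a production version in `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows the model classifies correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Callable[[Row], int], X: Sequence[Row],
                           y: Sequence[int], n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical credit model: approve when income (in $1000s) and years of credit
# history are both high enough. Feature 2 ("zip_digit") is deliberately ignored.
def toy_model(row: Row) -> int:
    income, history, _zip_digit = row
    return int(income > 50 and history > 3)


rng = random.Random(42)
X = [[rng.uniform(20, 100), rng.uniform(0, 10), rng.randint(0, 9)] for _ in range(500)]
y = [toy_model(row) for row in X]  # labels come from the model itself, so baseline accuracy is 1.0

for name, score in zip(["income", "history", "zip_digit"], permutation_importance(toy_model, X, y)):
    print(f"{name:10s} {score:.3f}")
```

Because the model never reads `zip_digit`, shuffling it changes nothing and its importance is exactly zero. Income and history both show clear drops.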

Visualization

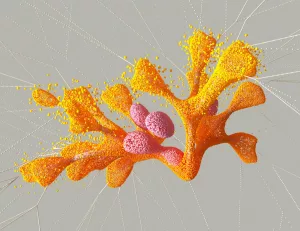

Visualization is a powerful tool for making complex data and models more accessible. There are a number of different visualization techniques that can be used to make machine learning models more interpretable, such as:

- Decision Trees: As mentioned, decision trees can be visualized to provide a clear, hierarchical view of decision paths and outcomes.

- Feature Importance Plots: These plots graphically represent the importance of each feature used by the model, allowing stakeholders to quickly identify key drivers of model decisions.

- Partial Dependence Plots: Beyond numerical insights, these plots offer a graphical view of feature effects, making it easier for non-experts to grasp complex relationships.

These visualizations can help to make the model’s decision-making process more transparent and easier to understand for non-experts.
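The numbers behind a partial dependence plot are simple to compute. For each grid value of the chosen feature, overwrite that feature in every row and average the model’s predictions. The resulting (value, average) pairs are what gets drawn as the curve. This is a minimal sketch; `toy_score` is a hypothetical regression model and the data are synthetic.

```python
import random
from typing import Callable, List, Sequence, Tuple

Row = List[float]


def partial_dependence(model: Callable[[Row], float], X: Sequence[Row],
                       feature: int, grid: Sequence[float]) -> List[Tuple[float, float]]:
    """Average prediction over the dataset with `feature` fixed to each grid value."""
    curve = []
    for value in grid:
        # Replace the chosen feature in every row; other features keep their observed values.
        preds = [model(row[:feature] + [value] + row[feature + 1:]) for row in X]
        curve.append((value, sum(preds) / len(preds)))
    return curve


# Hypothetical model: score rises linearly with feature 0 and saturates in feature 1.
def toy_score(row: Row) -> float:
    x0, x1 = row
    return 2.0 * x0 + min(x1, 5.0)


rng = random.Random(0)
X = [[rng.uniform(0, 10), rng.uniform(0, 10)] for _ in range(200)]

for value, avg in partial_dependence(toy_score, X, feature=1, grid=[0, 2, 4, 6, 8, 10]):
    print(f"x1={value:>2}  average prediction={avg:.2f}")
```

The printed curve rises up to `x1 = 5` and is flat afterwards, which matches the saturation built into the toy model. A plot of these pairs would show that to a non-expert at a glance.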

Transparency by Design

This approach involves designing machine learning models that are inherently more transparent and interpretable, such as:

- Decision Lists: These are ordered sets of if-then rules that directly map inputs to outputs. Their simplicity allows for straightforward interpretation and verification.

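- Decision Sets: Unlike lists, decision sets are unordered collections of if-then rules. Because no rule’s meaning depends on the rules before it, each rule can be read on its own, which provides flexibility while maintaining interpretability.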

- Rule-Based Models: These models use a series of logical rules to make decisions, which can be easily understood and adjusted by humans.

By designing models that are transparent from the start, organizations can more readily verify that the model’s decisions align with their values and ethical principles.
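A decision list can be written directly as an ordered sequence of if-then rules in which the first matching rule decides the output. The matching rule then doubles as the explanation. This is a minimal sketch. The loan-screening rules, thresholds, and the `Rule`/`predict` names are hypothetical and are not drawn from any real lender.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Applicant = Dict[str, float]


@dataclass
class Rule:
    description: str                        # human-readable condition, shown as the explanation
    condition: Callable[[Applicant], bool]
    outcome: str


def predict(rules: List[Rule], default: str, applicant: Applicant) -> Tuple[str, str]:
    """Return (outcome, explanation) from the first rule whose condition holds."""
    for rule in rules:
        if rule.condition(applicant):
            return rule.outcome, f"IF {rule.description} THEN {rule.outcome}"
    return default, f"no rule matched, default {default}"


# Hypothetical rules; order matters because evaluation stops at the first match.
RULES = [
    Rule("missed_payments > 2", lambda a: a["missed_payments"] > 2, "deny"),
    Rule("debt_to_income > 0.45", lambda a: a["debt_to_income"] > 0.45, "deny"),
    Rule("income >= 40000", lambda a: a["income"] >= 40000, "approve"),
]

print(predict(RULES, "manual review", {"missed_payments": 0, "debt_to_income": 0.30, "income": 52000}))
# ('approve', 'IF income >= 40000 THEN approve')
print(predict(RULES, "manual review", {"missed_payments": 3, "debt_to_income": 0.20, "income": 90000}))
# ('deny', 'IF missed_payments > 2 THEN deny')
```

The second applicant has a high income but is still denied, because the missed-payments rule comes first. That ordering is exactly what a reviewer can read and verify.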

Post-Hoc Explanations

Post-hoc explanations are techniques that can be used to explain the decisions of a black-box model after the fact. These techniques include:

- Feature Importance: Applied after training, feature importance methods clarify which features were most influential, either across the model as a whole or for specific predictions.

- Sensitivity Analysis: This involves analyzing how the variation in model output can be attributed to different inputs, providing insights into model robustness and decision pathways.

- Local Interpretable Model-Agnostic Explanations (LIME): LIME explains an individual prediction of any black-box classifier or regressor. It perturbs the input, weights the perturbed samples by their proximity to the original instance, and trains a simple interpretable model on the black box’s outputs in that local neighborhood.

These methods allow organizations to understand the reasoning behind specific decisions made by the model, even if the overall model is complex and opaque.
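The core idea of LIME can be sketched without the `lime` package. The steps are:

1. Sample perturbations around the instance.
2. Weight each sample with an exponential proximity kernel.
3. Fit a weighted linear model whose coefficients act as the local explanation.

This is a simplified sketch under stated assumptions: Gaussian perturbations, a fixed kernel width, and plain weighted least squares. It omits the feature selection and regularization the reference package applies. `lime_explain`, `solve`, and `black_box` are hypothetical names, not the `lime` API.

```python
import math
import random
from typing import Callable, List, Sequence


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][c] * x[c] for c in range(i + 1, n))) / M[i][i]
    return x


def lime_explain(model: Callable[[Sequence[float]], float], instance: Sequence[float],
                 n_samples: int = 500, scale: float = 1.0, kernel_width: float = 0.75,
                 seed: int = 0) -> List[float]:
    """Return [intercept, w_1, ..., w_d] of a proximity-weighted linear surrogate."""
    rng = random.Random(seed)
    d = len(instance)
    XtWX = [[0.0] * (d + 1) for _ in range(d + 1)]   # accumulates X^T W X
    XtWy = [0.0] * (d + 1)                            # accumulates X^T W y
    for _ in range(n_samples):
        z = [x + rng.gauss(0.0, scale) for x in instance]      # perturbed neighbour
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        w = math.exp(-dist2 / kernel_width ** 2)               # closer samples count more
        row = [1.0] + z                                        # leading 1.0 for the intercept
        y = model(z)                                           # query the black box
        for i in range(d + 1):
            XtWy[i] += w * row[i] * y
            for j in range(d + 1):
                XtWX[i][j] += w * row[i] * row[j]
    return solve(XtWX, XtWy)


# Hypothetical black box: nonlinear in x0, linear in x1.
def black_box(x: Sequence[float]) -> float:
    return x[0] ** 2 + 3.0 * x[1]


coef = lime_explain(black_box, [1.0, 2.0])
print("local weights:", [round(c, 2) for c in coef[1:]])  # close to the local slopes 2*x0 = 2 and 3
```

At the instance `[1.0, 2.0]`, the surrogate’s weights approximate the model’s local slopes. This is why a linear explanation can be faithful near one prediction even when the global model is nonlinear.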

Industry Applications and Case Studies

XAI has the potential to make a significant impact in many industries. Let’s delve deeper into how XAI is being applied across various sectors:

Healthcare

In the healthcare industry, XAI can be used to improve the interpretability of models used for diagnosing medical conditions, which can help doctors make more informed decisions. For instance, a model predicting the likelihood of patient readmissions could highlight key risk factors such as age, previous hospital visits, and specific medical history. By understanding these, healthcare providers can offer targeted interventions.
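One simple way to surface such risk factors is a logistic model that reports each feature’s contribution to the log-odds alongside the predicted probability. This is a minimal sketch. The coefficients, intercept, and feature names are hypothetical and were invented for illustration, not derived from clinical data.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical coefficients (log-odds per unit), for illustration only.
INTERCEPT = -4.0
COEFS = {"age_over_65": 0.8, "prior_admissions": 0.5, "chronic_conditions": 0.3}


def explain_readmission(patient: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return predicted readmission probability and features ranked by log-odds contribution."""
    contributions = {name: coef * patient[name] for name, coef in COEFS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return probability, ranked


prob, factors = explain_readmission({"age_over_65": 1, "prior_admissions": 3, "chronic_conditions": 2})
print(f"risk={prob:.2f}")  # risk=0.25
for name, contribution in factors:
    print(f"  {name:20s} {contribution:+.2f} log-odds")
```

Contributions here are measured against a feature value of zero. A real system would more likely compare against a clinically meaningful reference patient.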

A real-world example is IBM Watson Health, which leverages XAI to provide doctors with interpretable insights from vast medical datasets. This helps in diagnosing rare diseases by drawing parallels from similar historical cases, offering a transparent view of how conclusions are derived.

Finance

In finance, XAI can be used to improve the interpretability of models used for credit risk assessment, which can help lenders make more informed decisions. By employing techniques such as feature importance analysis, financial institutions can understand why certain applicants are approved or denied loans. This is crucial for compliance with U.S. regulations such as the Equal Credit Opportunity Act and the Fair Credit Reporting Act, which require lenders to tell applicants the principal reasons, or key factors, behind an adverse credit decision.

A notable case is the use of XAI by FICO, a company known for its credit scoring systems. FICO uses explainable models to provide reason codes for credit scores, which helps consumers understand the factors affecting their scores and take corrective actions.
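One common way to derive reason codes uses a points-based scorecard. Each attribute earns points, and the attributes where the applicant fell furthest below the best attainable points are reported as the top reasons. The sketch below shows that idea. It is not FICO’s proprietary method, and the bins, points, and attribute names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical scorecard: for each attribute, (upper_bound, points) bins checked in order.
SCORECARD: Dict[str, List[Tuple[float, int]]] = {
    "utilization_pct": [(30, 60), (60, 35), (float("inf"), 10)],
    "months_history": [(24, 15), (84, 40), (float("inf"), 55)],
    "late_payments": [(0, 70), (2, 40), (float("inf"), 5)],
}


def points_for(attribute: str, value: float) -> int:
    """Points from the first bin whose upper bound the value does not exceed."""
    for upper, points in SCORECARD[attribute]:
        if value <= upper:
            return points
    raise ValueError(f"no bin for {attribute}={value}")


def score_with_reasons(applicant: Dict[str, float], top_n: int = 2) -> Tuple[int, List[str]]:
    """Total score plus the attributes with the largest shortfall from their maximum points."""
    earned = {a: points_for(a, applicant[a]) for a in SCORECARD}
    shortfall = {a: max(p for _, p in bins) - earned[a] for a, bins in SCORECARD.items()}
    reasons = sorted((a for a in shortfall if shortfall[a] > 0), key=shortfall.get, reverse=True)
    return sum(earned.values()), reasons[:top_n]


print(score_with_reasons({"utilization_pct": 72, "months_history": 30, "late_payments": 1}))
# (90, ['utilization_pct', 'late_payments'])
```

High utilization cost this applicant 50 points and a late payment cost 30, so those two become the reported reasons. A consumer can act on them directly.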

Autonomous Vehicles

In autonomous vehicles, XAI can be used to improve the interpretability of models used for decision-making, which can help to ensure that the vehicles are safe and reliable. For example, understanding why a car decides to change lanes or apply brakes can be critical for both development and public trust. XAI techniques such as decision trees and rule-based approaches can provide insights into these decisions, making it easier for engineers to troubleshoot and improve vehicle safety.
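To make the braking example concrete, here is a tiny hand-written decision tree that returns both the action and the path of tests that produced it. The features, thresholds, and actions are hypothetical and far simpler than any real driving stack.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Leaf:
    action: str


@dataclass
class Node:
    feature: str
    threshold: float
    below: "Tree"   # branch taken when feature < threshold
    above: "Tree"   # branch taken otherwise


Tree = Union[Node, Leaf]


def decide(tree: Tree, state: Dict[str, float]) -> Tuple[str, List[str]]:
    """Walk from the root to a leaf, recording each test as a readable step."""
    path: List[str] = []
    while isinstance(tree, Node):
        value = state[tree.feature]
        if value < tree.threshold:
            path.append(f"{tree.feature}={value} < {tree.threshold}")
            tree = tree.below
        else:
            path.append(f"{tree.feature}={value} >= {tree.threshold}")
            tree = tree.above
    return tree.action, path


# Hypothetical tree: brake hard when time-to-collision is short,
# otherwise react to how sharply the lead vehicle is decelerating.
TREE = Node("time_to_collision_s", 2.0,
            below=Leaf("brake hard"),
            above=Node("lead_vehicle_decel_mps2", 3.0,
                       below=Leaf("maintain speed"),
                       above=Leaf("brake gently")))

action, path = decide(TREE, {"time_to_collision_s": 3.5, "lead_vehicle_decel_mps2": 4.2})
print(action)            # brake gently
print(" -> ".join(path))
```

The recorded path tells an engineer exactly which thresholds led to the action, which is what makes troubleshooting tractable.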

Tesla, a leader in autonomous driving technology, is actively exploring XAI to enhance the transparency of its systems, aiming to demonstrate how their algorithms make real-time driving decisions.

Balancing Interpretability and Accuracy

Achieving interpretability and transparency in machine learning models is not a trivial task. It requires a combination of the right methods, techniques, and domain knowledge. At the same time, interpretability is not always at odds with performance: a model can often be both accurate and interpretable, although getting there may involve some trade-offs. Let’s explore this balancing act further.

Trade-offs and Strategies

- Simplicity vs. Complexity: A simple and interpretable model may not have the same level of accuracy as a more complex model. For instance, a linear regression model is inherently more interpretable than a deep neural network but might not capture intricate patterns in data as effectively.

- Hybrid Models: One strategy is to pair a complex model used for prediction with a simpler surrogate model used for explanation. The surrogate is trained to mimic the complex model’s outputs, so the primary model handles accuracy while the secondary model provides interpretability, as long as the two agree closely enough.

- Iterative Approach: Developing models iteratively allows for incremental improvements in both accuracy and interpretability. By continuously refining models and incorporating feedback from stakeholders, organizations can move toward the balance that best suits their use case.

Therefore, it’s important for organizations to carefully consider the trade-offs between interpretability and performance when developing AI systems.
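The hybrid strategy is commonly realized as a global surrogate. A simple model is fit to the complex model’s predictions rather than to the true labels. Its fidelity, the share of inputs on which the two models agree, indicates how far the simple explanation can be trusted. This is a minimal sketch under assumptions: `complex_model` is a hypothetical nonlinear classifier, and the surrogate is a one-split decision stump.

```python
import random
from typing import List, Sequence, Tuple

Row = List[float]
Stump = Tuple[int, float, int]  # (feature index, threshold, label predicted above threshold)


def stump_predict(stump: Stump, row: Row) -> int:
    j, threshold, label_above = stump
    return label_above if row[j] > threshold else 1 - label_above


def fit_stump(X: Sequence[Row], targets: Sequence[int]) -> Stump:
    """Exhaustively search for the single split that best reproduces the targets."""
    best: Stump = (0, 0.0, 1)
    best_agree = -1
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            for label_above in (0, 1):
                candidate = (j, threshold, label_above)
                agree = sum(stump_predict(candidate, row) == t for row, t in zip(X, targets))
                if agree > best_agree:
                    best, best_agree = candidate, agree
    return best


# Hypothetical "complex" model with a curved decision boundary.
def complex_model(row: Row) -> int:
    x0, x1 = row
    return int(x0 + 0.3 * x1 * x1 > 6.0)


rng = random.Random(1)
X = [[rng.uniform(0, 10), rng.uniform(0, 3)] for _ in range(300)]
teacher = [complex_model(row) for row in X]   # the surrogate learns from model outputs
stump = fit_stump(X, teacher)
fidelity = sum(stump_predict(stump, row) == t for row, t in zip(X, teacher)) / len(X)
print(f"surrogate: feature {stump[0]} > {stump[1]:.2f} -> {stump[2]}, fidelity {fidelity:.0%}")
```

The stump gives a one-line explanation, but its fidelity stays below 100% because it cannot follow the curved boundary. That gap is the interpretability-versus-accuracy trade-off in miniature.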

Addressing Bias and Fairness

Another important aspect of XAI is the ability to detect and address bias in machine learning models. As data is often collected and labeled by humans, it can contain biases that are unconsciously introduced. These biases can lead to unfair and unjust decisions when the models are used to make predictions. XAI can help to detect and address these biases by providing insights into how the model is making decisions and identifying any potential sources of bias in the data.

Techniques for Bias Detection

- Bias Auditing: Regular audits of model outputs can identify patterns of unfairness. This involves analyzing predictions across different demographic groups to ensure equity.

- Fairness Constraints: Implementing fairness constraints within the model development process can help prevent biased outcomes. These constraints limit how much the model’s outcomes may differ across groups, reducing the risk that any particular group is disproportionately disadvantaged.

- Re-sampling and Re-weighting: Adjusting the dataset to balance representation or applying weights to underrepresented groups can mitigate bias.
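A basic bias audit compares selection rates, meaning the share of positive predictions, across groups. The sketch computes per-group rates, the gap between the highest and lowest rate, and their ratio. US employment selection guidelines compare that ratio against a "four-fifths" (0.8) rule of thumb. The group labels and predictions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(groups: Sequence[str], predictions: Sequence[int]) -> Dict[str, float]:
    """Share of positive predictions (e.g. 'advance to interview') within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}


def audit(groups: Sequence[str], predictions: Sequence[int]) -> Dict[str, float]:
    rates = selection_rates(groups, predictions)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "parity_difference": highest - lowest,                # 0.0 means equal selection rates
        "impact_ratio": lowest / highest if highest else 1.0,  # below 0.8 fails the four-fifths rule of thumb
    }


# Hypothetical audit data: 10 applicants from group A, 10 from group B.
groups = ["A"] * 10 + ["B"] * 10
predictions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]

print(selection_rates(groups, predictions))  # {'A': 0.6, 'B': 0.3}
print(audit(groups, predictions))
```

Here group B is selected at half the rate of group A (impact ratio 0.5), which an audit would flag for investigation.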

Real-world Example

Consider a hiring algorithm that inadvertently favors candidates of a certain demographic due to historical data biases. By using XAI, the organization can identify biased patterns in feature importance and adjust the model or dataset to ensure a fairer hiring process.
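The re-weighting technique listed among the bias-detection methods can be applied to such historical hiring data. One well-known scheme, reweighing (Kamiran and Calders), gives each training example the weight P(group) × P(label) / P(group, label). To a learner trained with these weights, group membership and the hiring outcome look statistically independent. This is a minimal sketch on hypothetical records.

```python
from collections import Counter
from typing import List, Sequence


def reweigh(groups: Sequence[str], labels: Sequence[int]) -> List[float]:
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Hypothetical historical hiring data: group B was hired less often.
groups = ["A"] * 6 + ["B"] * 6
hired = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0, 0, 0]

weights = reweigh(groups, hired)


def weighted_hire_rate(group: str) -> float:
    """Hire rate within a group when each example counts with its weight."""
    num = sum(w * y for g, y, w in zip(groups, hired, weights) if g == group)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


print(round(weighted_hire_rate("A"), 3), round(weighted_hire_rate("B"), 3))  # 0.417 0.417
```

After reweighing, both groups have the same weighted hire rate: 5/12, the overall rate. The weights only adjust the training signal, however. Whether the retrained model is actually fairer still needs to be checked with an audit.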

Building Trust and Ethical AI

XAI is also crucial for building trust with customers and regulators. AI systems that are more interpretable and transparent can help to reduce bias, increase fairness, and improve trust in the technology. Furthermore, XAI can help to ensure that AI systems are aligned with societal values and ethical principles. This is becoming increasingly important as AI is used in more critical decision-making scenarios, where people must be able to trust the decisions these systems make.

Trust through Transparency

- Regulatory Compliance: Many industries are subject to regulations that require transparency in decision-making processes. XAI helps meet these compliance requirements by providing clear explanations of model decisions.

- Consumer Confidence: When customers understand how AI systems work, they are more likely to trust and adopt the technology. This trust can be crucial in sectors like healthcare and finance, where decisions have significant personal impact.

- Ethical AI Practices: By aligning AI systems with ethical guidelines and societal values, organizations can ensure responsible AI deployment. This involves not only technical transparency but also clear communication of AI capabilities and limitations.

Future Directions and Challenges

As the field of AI continues to evolve, it’s crucial that we continue to research and develop methods for making AI more interpretable and transparent. This will not only benefit organizations and industries but also society as a whole. By making AI more explainable, we can increase trust in the technology, reduce bias, and increase fairness.

Areas for Future Research

- Advanced Visualization Techniques: Developing new ways to visualize complex models and data relationships can further enhance interpretability.

- Cross-disciplinary Collaboration: Combining insights from fields such as psychology, sociology, and law can lead to more holistic approaches to XAI.

- Automated Explanation Generation: Research into automated systems that generate human-readable explanations could revolutionize how we interact with AI.

Challenges to Overcome

- Scalability: Ensuring that XAI techniques can be applied to large-scale systems without prohibitive computational costs.

- Dynamic Systems: Models that continuously learn and adapt pose challenges for maintaining transparency and interpretability.

- User-centric Design: Creating XAI systems that cater to the needs of diverse users, from technical experts to laypersons, requires thoughtful design and usability considerations.

In summary, XAI is a critical step towards creating responsible and trustworthy AI systems that are beneficial for all. As organizations increasingly use AI to make important decisions, it’s vital to understand the reasoning behind these decisions. It’s also crucial to detect and address any bias and align AI systems with societal values and ethical principles. Through continued innovation and collaboration, we can ensure that AI serves as a force for good, driving progress and equity across all sectors.