In the fast-moving field of artificial intelligence (AI), the choice of computational hardware often determines how quickly an advance can be made, and whether it is feasible at all. Central Processing Units (CPUs) have long been the standard for general computing, but Graphics Processing Units (GPUs) have become the workhorse of AI, particularly in machine learning and deep learning. This article examines why GPUs dominate AI workloads, how their capabilities compare with those of CPUs, and where CPUs still fit.

The Genesis of the GPU’s Role in AI

The story of GPUs in AI is as much about the evolution of AI as about hardware. GPUs were originally designed to accelerate graphics rendering. AI researchers then recognized that the same architecture, built to apply identical operations to millions of pixels at once, is well suited to the matrix arithmetic at the heart of neural network training. That discovery has made GPUs synonymous with AI development.

Parallel Processing: The Core of GPU Efficiency

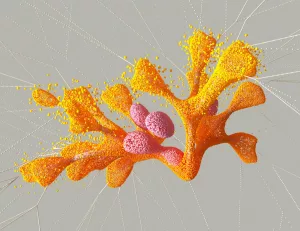

The crux of GPU superiority in AI lies in parallel processing. A CPU has a relatively small number of powerful cores optimized for low-latency, largely sequential work. A GPU has thousands of simpler cores that apply the same operation to many data elements at once. AI algorithms are full of exactly this kind of work. In a matrix multiplication, for example, every element of the output can be computed independently of the others, and neural network layers are built largely from such operations.
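To make that independence concrete, here is a minimal Python sketch (standard library only) in which each element of a matrix product is submitted as a separate task. It illustrates the structure a GPU exploits, not GPU speed: Python threads are constrained by the global interpreter lock. `dot` and `matmul_parallel` are hypothetical helpers written for this example.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    """One output element of A @ B: an independent dot product."""
    return sum(a * b for a, b in zip(row, col))

def matmul_parallel(A, B, max_workers=4):
    """Compute A @ B by submitting every output element as its own task."""
    cols = list(zip(*B))  # columns of B
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # No task depends on another task's result, so they may run in any order.
        futures = [[pool.submit(dot, row, col) for col in cols] for row in A]
        return [[f.result() for f in row_futures] for row_futures in futures]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```

A GPU applies the same idea with thousands of hardware threads instead of a small thread pool.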

Understanding GPU Architecture

To appreciate the power of GPUs, it helps to understand their architecture. A modern GPU has thousands of cores. An NVIDIA RTX 3090, for example, has 10,496 CUDA cores grouped into 82 streaming multiprocessors. Each CUDA core is far simpler than a CPU core, but together these cores perform massively parallel calculations, which is crucial for the data-intensive tasks of deep learning. The same architecture efficiently handles operations like convolution, a staple of image processing algorithms.
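CUDA maps its threads to data with a simple indexing scheme. Each thread computes a global index from its block ID, the block size, and its thread ID within the block, and then processes one element. The Python sketch below emulates that scheme sequentially for a vector addition. `launch` and `vector_add_kernel` are hypothetical names, and the nested loop stands in for hardware that would run the threads in parallel.

```python
import math

def launch(kernel, grid_dim, block_dim, *args):
    """Emulate a 1-D CUDA-style launch by invoking the kernel for every
    (block, thread) pair. On a GPU these invocations run concurrently."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    # Same formula as blockIdx.x * blockDim.x + threadIdx.x in CUDA C++.
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may contain surplus threads
        out[i] = a[i] + b[i]

n = 1000
a = [float(i) for i in range(n)]
b = [2.0 * i for i in range(n)]
out = [0.0] * n
block_dim = 256
grid_dim = math.ceil(n / block_dim)  # 4 blocks = 1024 threads for 1000 elements
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(grid_dim, out[999])  # 4 2997.0
```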

Real-World Parallel Processing Example

Consider training a convolutional neural network (CNN) for image recognition. Each image is represented as a grid of pixel values, and each convolutional layer slides small filters across that grid. Every output value depends only on a small patch of the input, so thousands of them can be computed at the same time. A GPU spreads these computations across its cores, dramatically speeding up training. A CPU can parallelize the same work across its cores and SIMD (vector) units, but it has far fewer parallel lanes, so much more of the work is effectively serialized.
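The independence that makes convolution GPU-friendly is visible in a direct implementation. This minimal Python sketch uses a hypothetical `conv2d` helper that handles a single channel with no padding or stride. Each output value reads only a small input patch and never depends on another output value.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1

    def output_pixel(r, c):
        # Reads only a kh x kw patch and produces one value, so each (r, c)
        # could be handed to a different GPU thread.
        return sum(image[r + i][c + j] * kernel[i][j]
                   for i in range(kh) for j in range(kw))

    return [[output_pixel(r, c) for c in range(out_w)] for r in range(out_h)]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 1],
          [1, 1]]
print(conv2d(image, kernel))  # [[12, 16], [24, 28]]
```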

The Impact on Deep Learning

Deep learning algorithms, characterized by complex neural networks and massive datasets, benefit immensely from this parallel processing. Training a neural network involves enormous numbers of computations that are inherently parallelizable, which makes it a natural fit for GPU architecture. This capability accelerates training and also makes larger, more complex models practical, pushing the boundaries of what’s achievable in AI.

Case Study: ImageNet Competition

A landmark in deep learning came at the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). AlexNet, a convolutional neural network with about 60 million parameters trained on two NVIDIA GTX 580 GPUs, achieved a top-5 error rate of 15.3%, far ahead of the runner-up’s 26.2%. GPUs made it practical to train a network of that size on roughly 1.2 million images in under a week, and GPU-trained networks dominated the competition in the years that followed. The result showed that GPUs could handle the immense computational load of deep learning and set the stage for future AI innovations.

Specialized Hardware Features

Over the years, GPUs have evolved, with manufacturers integrating specialized hardware features to further boost AI performance. For instance:

- Tensor Cores: Found in NVIDIA’s GPUs, these cores are optimized for the high-speed multiplication and addition of matrices, a staple operation in deep learning algorithms.

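- Ray Tracing Cores: These are designed for graphics, where they accelerate bounding-volume-hierarchy traversal and ray-triangle intersection tests. Their use for AI is limited and largely experimental, for example in research that repurposes them for nearest-neighbor search.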

These specialized features ensure that GPUs are not just general-purpose processors but are tailored to meet the specific demands of AI computations.

Exploring Tensor Cores

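Tensor cores are a game changer for deep learning. Each one performs a mixed-precision matrix multiply-accumulate, D = A × B + C, on a small tile of matrices in a single operation. The inputs are stored in a low-precision format such as FP16, while the products are accumulated in higher-precision FP32 to limit rounding error. Low-precision inputs need half the memory and bandwidth of FP32, and the hardware completes a whole tile per operation. As a result, models train considerably faster, which shortens iteration cycles and time-to-market for AI applications.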
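The benefit of accumulating in higher precision is easy to demonstrate. This Python sketch emulates FP16 and FP32 rounding with the standard `struct` module, using format codes `e` and `f`. It compares a dot product whose running sum is kept in FP16 with one that takes FP16 inputs but accumulates in FP32. It is a numerical illustration under those assumptions, not a model of how Tensor Core hardware is programmed, and the function names are hypothetical.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack('<e', struct.pack('<e', x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack('<f', struct.pack('<f', x))[0]

def dot_fp16_accumulate(a, b):
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x) * to_fp16(y))  # running sum kept in FP16
    return acc

def dot_mixed_precision(a, b):
    acc = 0.0
    for x, y in zip(a, b):
        # FP16 inputs; the product of two FP16 values is exact in FP32,
        # and the running sum is rounded to FP32 instead of FP16.
        acc = to_fp32(acc + to_fp16(x) * to_fp16(y))
    return acc

ones = [1.0] * 4096
print(dot_fp16_accumulate(ones, ones))  # 2048.0 (2049 is not representable in FP16)
print(dot_mixed_precision(ones, ones))  # 4096.0
```

Once the FP16 accumulator reaches 2048, adding 1.0 rounds back to 2048, so the sum silently stalls. The FP32 accumulator has enough precision to count correctly.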

Memory Bandwidth and Data Throughput

AI algorithms, especially in machine learning, are data-intensive. GPUs address this with far higher memory bandwidth than CPUs. The RTX 3090’s GDDR6X memory, for example, delivers about 936 GB/s, while a desktop CPU with dual-channel DDR4-3200 memory peaks at about 51 GB/s. Faster data movement between memory and the cores minimizes bottlenecks and keeps the GPU’s cores busy, which raises overall throughput in AI tasks.
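Whether bandwidth or compute is the bottleneck depends on a kernel’s arithmetic intensity: the number of floating-point operations it performs per byte of memory traffic. The back-of-the-envelope Python sketch below applies the roofline-style reasoning common in performance analysis. The peak-throughput and bandwidth constants are illustrative assumptions, not the specifications of any particular GPU.

```python
FP32_BYTES = 4
n = 1024

def arithmetic_intensity(flops, bytes_moved):
    """FLOPs performed per byte moved to or from memory."""
    return flops / bytes_moved

# Element-wise add of two length-n FP32 vectors:
# 1 FLOP per element; 2 reads + 1 write per element.
add_ai = arithmetic_intensity(n, 3 * FP32_BYTES * n)

# n x n FP32 matrix multiply: 2n^3 FLOPs; at minimum the three
# n x n matrices (A, B, and the result) cross the memory bus once.
matmul_ai = arithmetic_intensity(2 * n**3, 3 * FP32_BYTES * n**2)

# Illustrative assumptions only, not the specs of a real product.
PEAK_FLOPS = 30e12   # FLOP/s
BANDWIDTH = 900e9    # bytes/s
balance = PEAK_FLOPS / BANDWIDTH  # FLOP/byte needed to keep the cores saturated

for name, ai in [("vector add", add_ai), ("matmul", matmul_ai)]:
    limit = "memory-bound" if ai < balance else "compute-bound"
    print(f"{name}: {ai:.3f} FLOP/byte, {limit}")
# vector add: 0.083 FLOP/byte, memory-bound
# matmul: 170.667 FLOP/byte, compute-bound
```

Element-wise operations are limited by memory bandwidth, which is why high bandwidth matters so much. Large matrix multiplications do enough arithmetic per byte to keep the cores busy.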

Practical Tip: Leveraging GPU Memory

When working with large datasets, efficient data management is key. Using batch processing and ensuring that data is loaded into the GPU’s memory in chunks can prevent memory overflow and maximize the GPU’s processing capabilities. This approach allows for handling larger datasets without compromising on computational speed.
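A minimal Python sketch of the batching tip follows. `max_batch_size` is a hypothetical heuristic: it assumes you already know the memory cost per sample, and that cost should include activations and gradients, not just the input tensor. It is best measured rather than guessed. `iter_batches` simply yields fixed-size chunks so that only one chunk needs to be resident on the device at a time.

```python
from itertools import islice

def max_batch_size(free_bytes, bytes_per_sample, safety_fraction=0.8):
    """Largest batch that fits in a fraction of free memory (hypothetical heuristic)."""
    return max(1, int(free_bytes * safety_fraction) // bytes_per_sample)

def iter_batches(samples, batch_size):
    """Yield successive lists of at most batch_size samples."""
    it = iter(samples)
    while batch := list(islice(it, batch_size)):
        yield batch

for batch in iter_batches(range(10), 4):
    print(batch)  # [0, 1, 2, 3], then [4, 5, 6, 7], then [8, 9]
```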

Ecosystem and Framework Support

The rise of GPUs in AI has been accompanied by the development of robust software ecosystems. NVIDIA’s CUDA platform, together with GPU-accelerated libraries built on it such as cuDNN, underpins frameworks like TensorFlow and PyTorch. These frameworks give developers high-level tools that abstract away the complexities of GPU programming. This ecosystem support has been crucial in democratizing AI development, allowing researchers and practitioners to leverage GPU capabilities without needing deep hardware expertise.

Enhancing Productivity with Frameworks

By using frameworks that support GPU acceleration, developers can focus on model design and improvement rather than low-level optimization. For example, PyTorch’s dynamic computation graph allows for more intuitive model debugging and iteration, making it a preferred choice for many researchers and developers working on cutting-edge AI projects.
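In a dynamic (define-by-run) computation graph, the graph is recorded as ordinary code executes, so plain Python control flow and a standard debugger work as usual. The toy scalar autograd below is a hypothetical pure-Python sketch of that idea. It is not how PyTorch is implemented, and `Value` is not a PyTorch class.

```python
class Value:
    """A scalar that records the operations applied to it (define-by-run)."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # Values this one was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        # Topologically order the graph recorded during the forward pass.
        order, seen = [], set()
        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)
        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, local in zip(v._parents, v._local_grads):
                parent.grad += local * v.grad  # chain rule

def f(x):
    # Ordinary Python control flow decides which graph is built on each call.
    if x.data > 0:
        return x * x + x
    return x * 3

x = Value(3.0)
y = f(x)
y.backward()
print(y.data, x.grad)  # 12.0 7.0
```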

Real-World Applications and Case Studies

The theoretical advantages of GPUs translate into tangible outcomes in various AI applications:

- Autonomous Vehicles: GPUs are at the heart of the computational systems in autonomous vehicles, processing vast amounts of sensor data in real time to make split-second decisions.

- Medical Imaging: In healthcare, GPUs accelerate the analysis of medical images, enabling faster and more accurate diagnoses.

- Natural Language Processing (NLP): GPUs have been instrumental in the training and deployment of large language models, facilitating advancements in translation, chatbots, and other NLP applications.

Detailed Example: Autonomous Vehicle Technology

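In autonomous vehicles, onboard processors handle data from cameras, lidar, and radar to identify objects, predict their movement, and make driving decisions. Tesla’s Autopilot hardware illustrates the demands involved. Its HW2 computer, introduced in 2016, was built on NVIDIA’s DRIVE PX 2 GPU platform, and the FSD computer that replaced it in 2019 relies on Tesla-designed neural network accelerators. Both generations show how much parallel processing real-time perception requires, and how central GPU-style parallelism is to safe and reliable autonomous driving technology.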

What Role Do CPUs Play in AI Development?

While GPUs have taken center stage in the AI revolution, particularly for their ability to handle parallel processing tasks efficiently, CPUs maintain a crucial role in AI systems.

CPU’s Strengths in Sequential Processing

CPUs are designed to handle a wide range of tasks and are particularly adept at sequential processing. This makes them well-suited for the parts of AI algorithms that require complex decision-making, logic, and control flow operations, which are not inherently parallelizable. These tasks include the overall orchestration of system operations, data preprocessing, and managing the input/output operations that are essential for AI applications.

CPUs in Data Handling and Preprocessing

Before data can be utilized in GPU-accelerated AI models, it often requires significant preprocessing, including cleaning, normalization, and transformation. CPUs are typically more efficient at handling these tasks, especially when the data cannot be easily parallelized. They are also responsible for managing data flow between different system components, ensuring that GPUs are fed with a constant stream of data for processing.
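The CPU’s job of keeping the GPU fed is commonly structured as a producer-consumer pipeline. CPU workers preprocess upcoming batches into a bounded buffer while the accelerator consumes the current one, which is the general pattern behind framework data loaders. A minimal sketch with Python’s `threading` and `queue` modules follows. `normalize` and the summing consumer are hypothetical stand-ins for real preprocessing and a real training step.

```python
import queue
import threading

def normalize(sample):
    """Hypothetical CPU-side preprocessing: min-max scale values into [0, 1]."""
    lo, hi = min(sample), max(sample)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in sample]

def producer(raw_samples, q):
    for sample in raw_samples:
        q.put(normalize(sample))  # blocks while the buffer is full (backpressure)
    q.put(None)                   # sentinel: no more data

def consumer(q, results):
    while (item := q.get()) is not None:
        results.append(sum(item))  # stand-in for a GPU training step

raw = [[0, 5, 10], [2, 4, 6], [1, 1, 1]]
q = queue.Queue(maxsize=2)  # bounded prefetch buffer
results = []
worker = threading.Thread(target=producer, args=(raw, q))
worker.start()
consumer(q, results)
worker.join()
print(results)  # [1.5, 1.5, 0.0]
```

Real data loaders often use worker processes instead of threads so that CPU-heavy preprocessing is not serialized by Python’s global interpreter lock. PyTorch’s `DataLoader`, for example, starts worker processes when `num_workers` is greater than zero.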

Hybrid CPU-GPU Systems

In most AI systems, CPUs and GPUs work in tandem, with the CPU taking on the role of managing system resources, orchestrating the execution of programs, and handling tasks that require more general-purpose computing capabilities. The GPU, on the other hand, is dedicated to executing the parallel computations required for AI model training and inference.

Example of Hybrid System in AI

Consider a recommendation system for an online retailer. The CPU might handle user data processing and feature extraction, while the GPU trains and updates the recommendation model. This collaborative approach ensures that both the CPU and GPU are utilized to their fullest potential, resulting in a more efficient and effective AI system.

Energy Efficiency and Cost Considerations

In scenarios where energy efficiency or cost is a concern, CPUs might be favored for certain AI tasks. While GPUs are more efficient for parallel processing, they also consume more power and can be more expensive. For smaller-scale AI tasks or in environments where power consumption is a critical factor, optimizing algorithms to run efficiently on CPUs can be advantageous.
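Power draw alone does not settle the question, because the energy a job consumes is its average power multiplied by its run time. A GPU that draws more power but finishes much sooner can use less energy overall, while for small or poorly parallelizable jobs the CPU may come out ahead. The minimal Python sketch below shows the arithmetic. Every wattage and duration in it is a hypothetical figure chosen for illustration, not a measurement.

```python
def energy_kwh(power_watts, hours):
    """Energy = average power x time, converted from watt-hours to kWh."""
    return power_watts * hours / 1000

# Hypothetical large, highly parallel job (all numbers are assumptions).
gpu = energy_kwh(power_watts=350, hours=2)    # faster but power-hungry
cpu = energy_kwh(power_watts=125, hours=10)   # slower, lower draw
print(gpu, cpu)  # 0.7 1.25

# Hypothetical small job where the GPU's speedup is modest.
small_gpu = energy_kwh(power_watts=350, hours=0.25)
small_cpu = energy_kwh(power_watts=125, hours=0.5)
print(small_cpu < small_gpu)  # True
```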

Balancing Performance and Cost

Organizations must weigh the benefits of GPU acceleration against the costs associated with power consumption and hardware investment. For instance, a startup may initially use CPUs to prototype AI models before scaling up with GPU-based solutions as their applications mature and require more computational power.

The Future of GPUs in AI

As AI continues to evolve, the symbiotic relationship between AI and GPU technology is expected to deepen. With advancements in GPU architecture, increased energy efficiency, and more specialized hardware features on the horizon, GPUs will continue to be at the forefront of AI research and application development.

Emerging Trends in GPU Technology

Looking ahead, developments such as quantum computing and neuromorphic chips may shift the landscape further. GPUs are nevertheless likely to remain a staple of AI, given their adaptability and continuous evolution. Vendors are already adding AI-specific features. NVIDIA’s Hopper-generation data-center GPUs, for example, include a Transformer Engine that uses FP8 precision to accelerate transformer models.

Common Mistakes in GPU Utilization

Despite their power, GPUs can be underutilized if not implemented correctly. Here are some common pitfalls and how to avoid them:

- Overloading the GPU: Attempting to process too much data at once can lead to memory overflow. It’s crucial to manage data flow efficiently.

- Ignoring Batch Processing: Failing to use batch processing can lead to inefficient computation. Always process data in batches that are optimal for the GPU’s memory.

- Mismatched Hardware and Software: Ensure that the software frameworks used are compatible and optimized for the GPU architecture to avoid performance bottlenecks.
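For the first two pitfalls, a common remedy is to find the largest batch that fits by trying a training step and backing off on out-of-memory errors. The sketch below assumes a hypothetical `try_step` callback that raises Python’s `MemoryError`. Real frameworks report GPU memory exhaustion with their own exception types (recent PyTorch versions raise `torch.cuda.OutOfMemoryError`), so the `except` clause would need adapting.

```python
def find_max_batch_size(try_step, start=1024, minimum=1):
    """Halve the batch size until one step succeeds.

    try_step(batch_size) is a hypothetical callback that runs a single
    training step and raises MemoryError if the batch does not fit.
    """
    batch_size = start
    while batch_size >= minimum:
        try:
            try_step(batch_size)
            return batch_size
        except MemoryError:
            batch_size //= 2
    raise RuntimeError("even the minimum batch size does not fit in memory")

# Simulated device that can hold at most 300 samples per step.
def fake_step(batch_size, capacity=300):
    if batch_size > capacity:
        raise MemoryError(f"batch of {batch_size} exceeds capacity {capacity}")

print(find_max_batch_size(fake_step))  # 256
```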

By understanding and addressing these issues, developers can fully harness the power of GPUs in their AI applications, leading to more efficient and powerful solutions.

In summary, while CPUs maintain their relevance in general computing and specific tasks, GPUs have carved out a definitive niche in AI, proving to be indispensable tools in the quest to unravel the complexities of intelligence and machine learning. As AI models grow in sophistication and the demand for faster, more efficient processing escalates, GPUs stand ready to propel the next wave of AI breakthroughs, cementing their status as the engines of modern artificial intelligence.